GIGABYTE AI Solutions with NVIDIA AI Enterprise

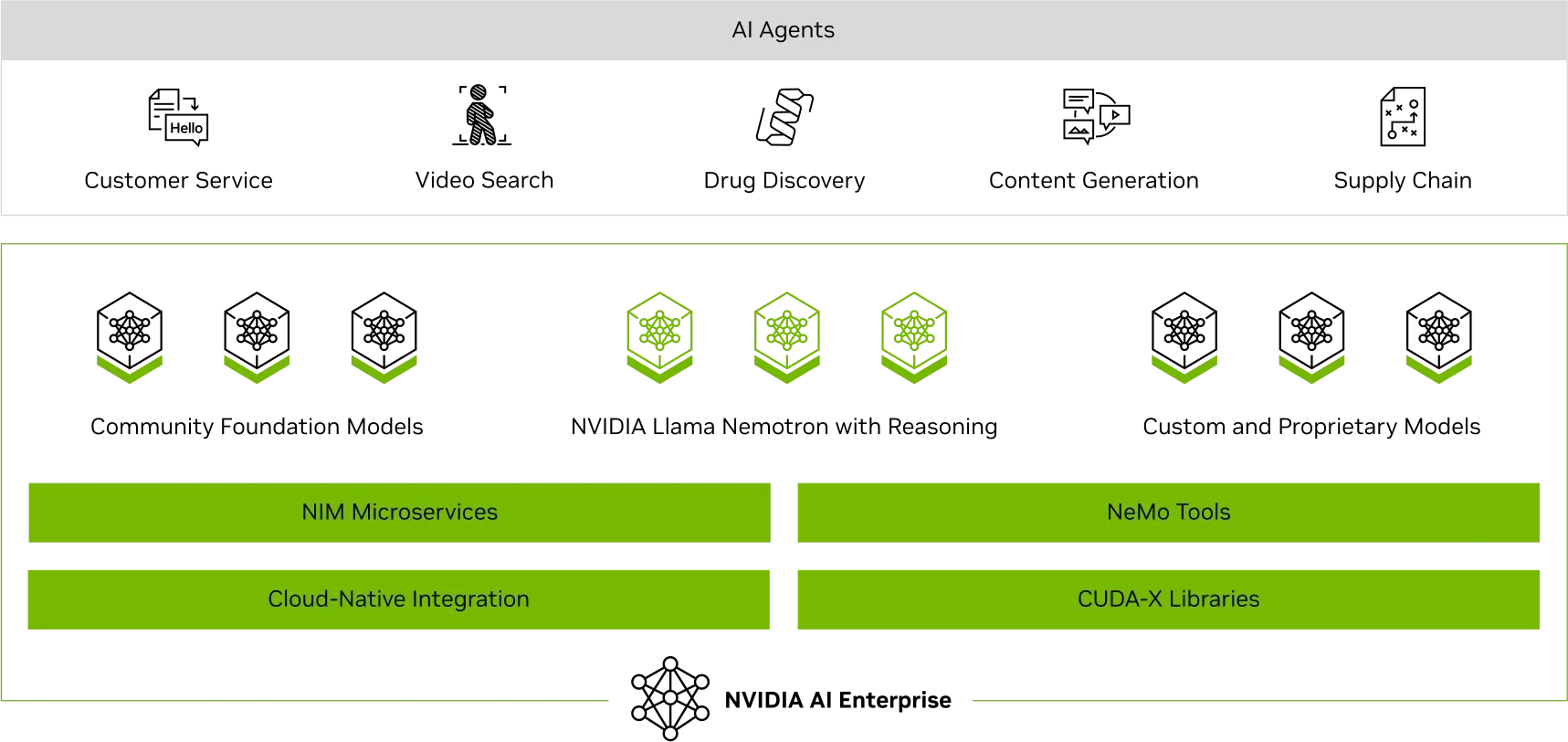

NVIDIA AI Enterprise is a cloud-native suite of software tools, libraries, and frameworks, including NVIDIA NIM and NeMo microservices, that accelerate and simplify the development, deployment, and scaling of AI applications. NVIDIA AI Enterprise helps to accelerate time to market and reduce infrastructure costs while ensuring reliable, secure, and scalable AI operations.

At GIGABYTE, we bring this software ecosystem to life with our high-performance GPU server platforms. From single-node systems to multi-rack deployments such as GIGAPOD, our solutions are validated for NVIDIA AI Enterprise, ensuring seamless integration and reliable operation. With GIGABYTE’s expertise in system design, planning, and validation, enterprises can adopt NVIDIA AI Enterprise with minimal effort and focus on building generative AI applications, accelerating AI adoption, and modernizing their data centers.

Production-Ready Software for Agentic AI

NVIDIA AI Enterprise Cloud-Native Suite

Base Command Manager

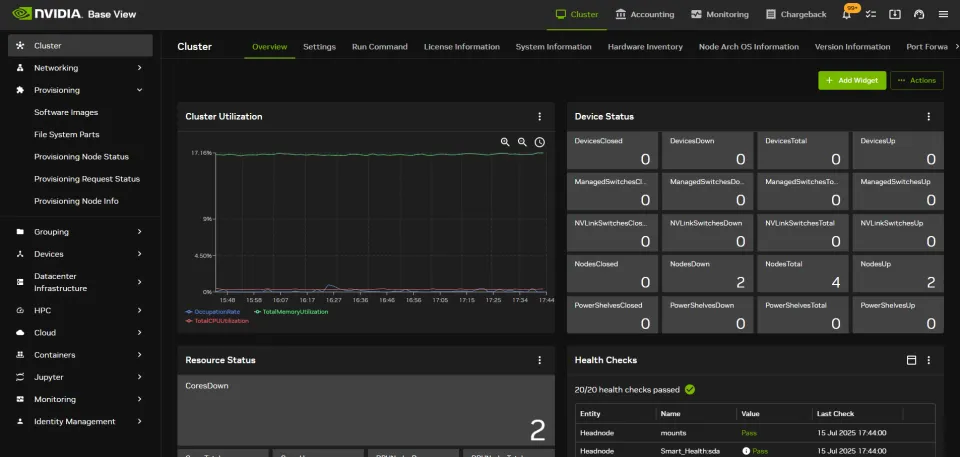

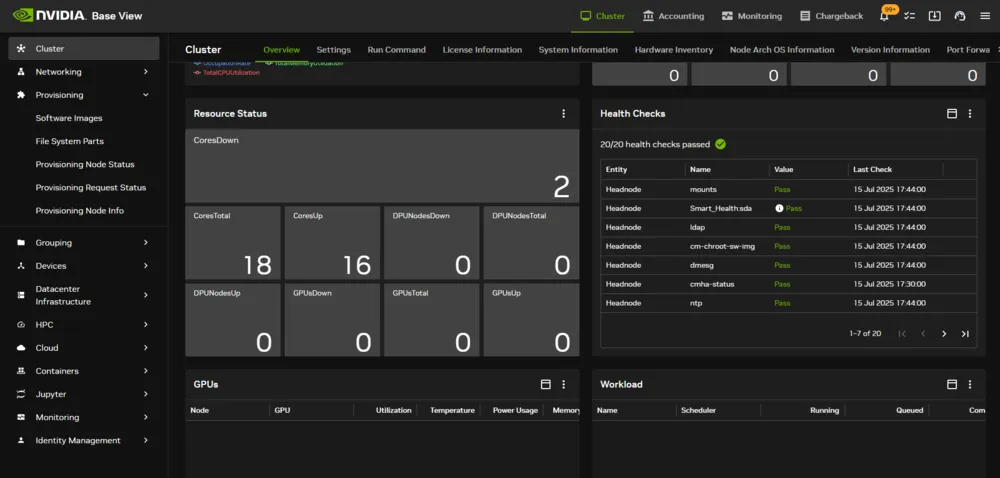

NVIDIA Base Command Manager software streamlines infrastructure provisioning, workload management, and resource monitoring across data center and cloud environments. Built for data science, it facilitates deployment of AI workload management tools and enables dynamic scaling and policy-based resource allocation. It also ensures cluster integrity and reports on cluster usage by project or application, enabling chargeback and accounting.

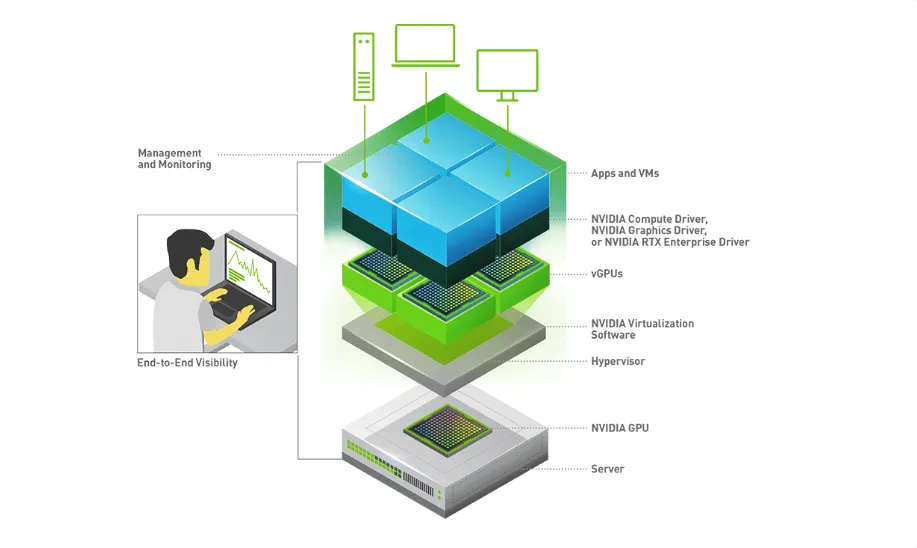

NVIDIA Virtual GPU (C-Series)

NVIDIA Virtual GPU (C-Series) software enables multiple virtual machines (VMs) to have simultaneous, direct access to a single physical GPU while maintaining the high-performance compute capabilities required for AI model training, fine-tuning, and inference workloads.

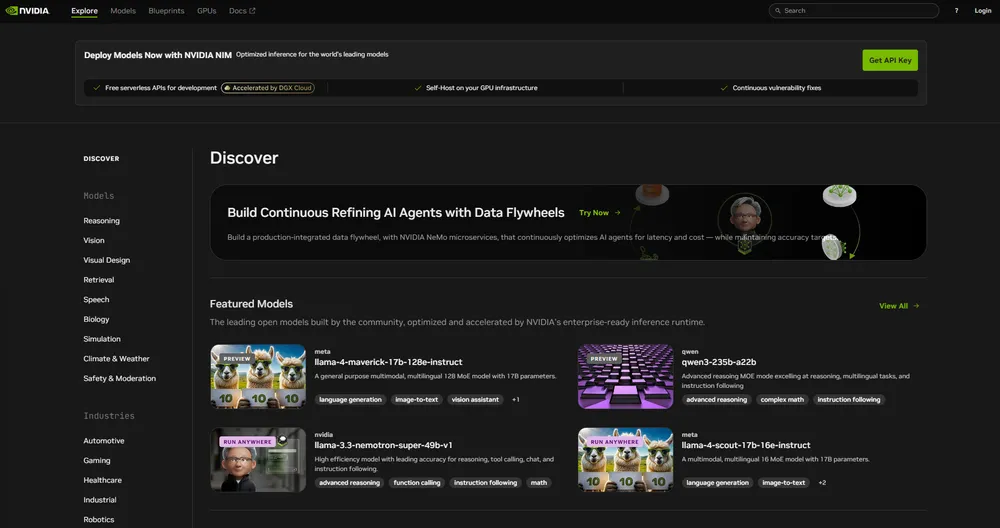

NVIDIA NIM Microservices

NVIDIA NIM microservices are containerized inference microservices from NVIDIA that let enterprises quickly deploy large language models (LLMs) and other AI models. Applications integrate with them through simple API calls.

NVIDIA AI Blueprints

NVIDIA AI Blueprints are reference architectures from NVIDIA that help enterprises design and implement end-to-end generative AI solutions. They are built using NIM microservices as the inferencing components and provide a starting point to build AI applications.

End-to-End AI with GIGABYTE

We help enterprises build a scalable AI computing architecture from the ground up.

- Infrastructure Level: The GIGAPOD solution uses NVIDIA Base Command Manager to provide unified, cluster-wide management with flexible scheduling and real-time monitoring

- Virtualization: Support for NVIDIA Virtual GPU (C-Series) to enable efficient, secure multi-tenancy on VMware and other platforms

- AI Deployment: NVIDIA NIM microservices integrated on GIGABYTE systems to bring LLMs and inference services online quickly

- Application Development: Reference-ready architectures with NVIDIA AI Blueprints, supported by GIGABYTE hardware integration and technical services

With GIGABYTE AI Solutions, enterprises get more than hardware. We provide one-stop services from system integration and validation to long-term support, ensuring stable, high-performance, and future-ready AI infrastructure.

Deep Dive into NVIDIA AI Enterprise Features

NVIDIA Base Command Manager (BCM)

NVIDIA Base Command Manager streamlines infrastructure provisioning, workload management, and resource monitoring. It provides all the tools you need to deploy and manage an AI data center.

Centralized GPU Cluster Resource Management

- Unified management of multiple GPU servers and user tasks

- Supports automated allocation of GPU, CPU, memory, and storage resources

- Provides CLI and API interfaces for easy automation and system integration

Real-Time Monitoring and Workflow Visualization

- Displays key metrics such as GPU utilization, temperature, and power consumption in real time

- Works with Base Command Portal to provide complete task history and monitoring logs

- Supports group firmware upgrades, updating servers in batches by model and cluster
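To make the monitored metrics concrete, the following is a minimal sketch of collecting GPU utilization, temperature, and power draw on a single host. It uses the standard `nvidia-smi` query interface as a stand-in and is not Base Command Manager's own CLI or API; the `GpuSample` type and the function names are hypothetical, and the sample values in the example are made up.

```python
import csv
import io
import subprocess
from dataclasses import dataclass
from typing import Optional

# Fields requested from nvidia-smi; these map to the metrics listed above.
QUERY_FIELDS = "index,utilization.gpu,temperature.gpu,power.draw"


@dataclass
class GpuSample:
    """One reading for one GPU (hypothetical helper type)."""
    index: int
    utilization_pct: Optional[float]
    temperature_c: Optional[float]
    power_w: Optional[float]


def _to_float(cell: str) -> Optional[float]:
    """Convert a CSV cell to float; values such as "[N/A]" become None."""
    try:
        return float(cell)
    except ValueError:
        return None


def parse_samples(csv_text: str) -> list[GpuSample]:
    """Parse `--format=csv,noheader,nounits` output into GpuSample objects."""
    samples = []
    for row in csv.reader(io.StringIO(csv_text)):
        if not row:
            continue  # skip blank lines
        idx, util, temp, power = (cell.strip() for cell in row)
        samples.append(
            GpuSample(int(idx), _to_float(util), _to_float(temp), _to_float(power))
        )
    return samples


def read_local_gpus() -> list[GpuSample]:
    """Query the GPUs on this host; requires the NVIDIA driver and nvidia-smi."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_samples(result.stdout)


if __name__ == "__main__":
    # Made-up sample text, so the parser can be tried without a GPU.
    example = "0, 87, 64, 412.35\n1, 12, 41, [N/A]\n"
    for sample in parse_samples(example):
        print(sample)
```

A cluster manager aggregates readings like these across all nodes; on a GPU host, `read_local_gpus()` returns the same structure from live data.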

NVIDIA NIM

NVIDIA NIM microservices enable enterprises to securely deploy and scale AI models with ease. By using standardized APIs and containerized services, businesses can quickly activate LLMs and other AI foundation models to power high-performance generative AI capabilities.

Ready-to-Use Inference Microservice Architecture

- Pre-packaged with commonly used AI models, such as LLMs, computer vision (CV) models, and automatic speech recognition (ASR) models, available on demand

- Exposed through REST APIs, enabling quick integration with existing applications

- Containerized architecture supports deployment on bare metal, in virtual machines, or in cloud environments
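As an illustration of the REST integration described above, here is a minimal sketch of calling a NIM LLM microservice through its OpenAI-compatible chat completions endpoint. The base URL, port, and model name are assumptions chosen for the example and do not come from this page; substitute the values for your own deployment. The helper names `build_chat_request` and `chat` are hypothetical.

```python
import json
import urllib.request

# Assumptions for illustration only: a NIM LLM container is reachable at this
# address and serves the model named below.
BASE_URL = "http://localhost:8000"
MODEL = "meta/llama-3.1-8b-instruct"


def build_chat_request(
    prompt: str,
    base_url: str = BASE_URL,
    model: str = MODEL,
    max_tokens: int = 128,
) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible chat completions route."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the prompt and return the first completion's text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Only builds and inspects the request, so it runs without a live service.
    request = build_chat_request("Summarize what a GPU cluster manager does.")
    print(request.get_method(), request.full_url)
```

Because the request shape follows the widely used chat completions convention, an application already written against that convention typically needs only a different base URL and model name.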

Simplified AI Application Deployment Process

- Eliminates the complexity of model development, training, and serving, lowering the barrier to adoption

- Automatically manages inference resources to enhance deployment and scalability efficiency

- Works with NVIDIA AI Blueprints to quickly build end-to-end generative AI solutions

NVIDIA Virtual GPU (C-Series)

NVIDIA Virtual GPU (C-Series) enables multiple virtual machines (VMs) to have simultaneous, direct access to a single physical GPU while maintaining the high-performance compute capabilities required for AI model training, fine-tuning, and inference workloads. By distributing GPU resources efficiently across multiple VMs, vGPU (C-Series) optimizes utilization and lowers overall hardware costs, making it especially beneficial for large-scale and highly flexible environments.

GPU Virtualization for Enhanced Resource Flexibility

- Flexibly allocates physical GPU resources to multiple virtual machines

- Supports multi-tenancy and resource isolation to ensure security and stable performance

- Improves hardware utilization and reduces total cost of ownership (TCO)
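The utilization benefit can be illustrated with simple capacity arithmetic. The sketch below assumes that each vGPU receives a fixed, equal slice of a physical GPU's frame buffer and that each VM uses one vGPU. The GPU count, frame buffer size, and profile sizes are hypothetical; the profiles and instance limits actually available depend on the GPU model and the vGPU software release.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuPool:
    """A group of identical physical GPUs (hypothetical helper type)."""
    gpu_count: int        # number of physical GPUs
    framebuffer_gb: int   # frame buffer per physical GPU, in GB


def vgpus_per_gpu(framebuffer_gb: int, profile_gb: int) -> int:
    """How many equal-size vGPU slices fit in one physical GPU."""
    if profile_gb <= 0:
        raise ValueError("profile_gb must be positive")
    return framebuffer_gb // profile_gb


def max_vms(pool: GpuPool, profile_gb: int) -> int:
    """Upper bound on VMs for the pool, assuming one vGPU per VM."""
    return pool.gpu_count * vgpus_per_gpu(pool.framebuffer_gb, profile_gb)


if __name__ == "__main__":
    pool = GpuPool(gpu_count=8, framebuffer_gb=80)  # hypothetical 8-GPU server
    for profile in (10, 20, 40, 80):
        print(f"{profile} GB profile: up to {max_vms(pool, profile)} VMs")
```

Smaller profiles raise the number of tenants per server, while larger profiles leave more memory per VM for training or fine-tuning, which is the trade-off behind the flexibility described above.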

Seamless Integration with Existing Virtualization Environments

- Compatible with leading hypervisors, requiring no changes to existing management workflows

- Works alongside enterprise virtualization features such as vMotion and High Availability (HA)

- Supports integration with NVIDIA NGC and ecosystem toolchains to accelerate AI adoption